Data Structures |

| struct | zil_replay_arg |

Defines |

| #define | LWB_EMPTY(lwb) |

| #define | ZILTEST_TXG (UINT64_MAX - TXG_CONCURRENT_STATES) |

| | ziltest is by and large an ugly hack, but very useful in checking replay without tedious work.

|

| #define | USE_SLOG(zilog) |

Typedefs |

| typedef struct zil_replay_arg | zil_replay_arg_t |

Functions |

| | SYSCTL_DECL (_vfs_zfs) |

| | TUNABLE_INT ("vfs.zfs.zil_replay_disable",&zil_replay_disable) |

| | SYSCTL_INT (_vfs_zfs, OID_AUTO, zil_replay_disable, CTLFLAG_RW,&zil_replay_disable, 0,"Disable intent logging replay") |

| | TUNABLE_INT ("vfs.zfs.cache_flush_disable",&zfs_nocacheflush) |

| | SYSCTL_INT (_vfs_zfs, OID_AUTO, cache_flush_disable, CTLFLAG_RDTUN,&zfs_nocacheflush, 0,"Disable cache flush") |

| | TUNABLE_INT ("vfs.zfs.trim_disable",&zfs_notrim) |

| | SYSCTL_INT (_vfs_zfs, OID_AUTO, trim_disable, CTLFLAG_RDTUN,&zfs_notrim, 0,"Disable trim") |

| static void | zil_async_to_sync (zilog_t *zilog, uint64_t foid) |

| | Move the async itxs for a specified object to commit into sync lists.

|

| static int | zil_bp_compare (const void *x1, const void *x2) |

| static void | zil_bp_tree_init (zilog_t *zilog) |

| static void | zil_bp_tree_fini (zilog_t *zilog) |

| int | zil_bp_tree_add (zilog_t *zilog, const blkptr_t *bp) |

| static zil_header_t * | zil_header_in_syncing_context (zilog_t *zilog) |

| static void | zil_init_log_chain (zilog_t *zilog, blkptr_t *bp) |

| static int | zil_read_log_block (zilog_t *zilog, const blkptr_t *bp, blkptr_t *nbp, void *dst, char **end) |

| | Read a log block and make sure it's valid.

|

| static int | zil_read_log_data (zilog_t *zilog, const lr_write_t *lr, void *wbuf) |

| | Read a TX_WRITE log data block.

|

| int | zil_parse (zilog_t *zilog, zil_parse_blk_func_t *parse_blk_func, zil_parse_lr_func_t *parse_lr_func, void *arg, uint64_t txg) |

| | Parse the intent log, calling parse_blk_func for each valid block and parse_lr_func for each valid record within.

|

| static int | zil_claim_log_block (zilog_t *zilog, blkptr_t *bp, void *tx, uint64_t first_txg) |

| static int | zil_claim_log_record (zilog_t *zilog, lr_t *lrc, void *tx, uint64_t first_txg) |

| static int | zil_free_log_block (zilog_t *zilog, blkptr_t *bp, void *tx, uint64_t claim_txg) |

| static int | zil_free_log_record (zilog_t *zilog, lr_t *lrc, void *tx, uint64_t claim_txg) |

| static lwb_t * | zil_alloc_lwb (zilog_t *zilog, blkptr_t *bp, uint64_t txg) |

| void | zilog_dirty (zilog_t *zilog, uint64_t txg) |

| | Called when we create in-memory log transactions so that we know to clean up the itxs at the end of spa_sync().

|

| boolean_t | zilog_is_dirty (zilog_t *zilog) |

| static lwb_t * | zil_create (zilog_t *zilog) |

| | Create an on-disk intent log.

|

| void | zil_destroy (zilog_t *zilog, boolean_t keep_first) |

| | In one tx, free all log blocks and clear the log header.

|

| void | zil_destroy_sync (zilog_t *zilog, dmu_tx_t *tx) |

| int | zil_claim (const char *osname, void *txarg) |

| int | zil_check_log_chain (const char *osname, void *tx) |

| | Check the log by walking the log chain.

|

| static int | zil_vdev_compare (const void *x1, const void *x2) |

| void | zil_add_block (zilog_t *zilog, const blkptr_t *bp) |

| static void | zil_flush_vdevs (zilog_t *zilog) |

| static void | zil_lwb_write_done (zio_t *zio) |

| | Function called when a log block write completes.

|

| static void | zil_lwb_write_init (zilog_t *zilog, lwb_t *lwb) |

| | Initialize the io for a log block.

|

| static lwb_t * | zil_lwb_write_start (zilog_t *zilog, lwb_t *lwb) |

| | Start a log block write and advance to the next log block.

|

| static lwb_t * | zil_lwb_commit (zilog_t *zilog, itx_t *itx, lwb_t *lwb) |

| itx_t * | zil_itx_create (uint64_t txtype, size_t lrsize) |

| void | zil_itx_destroy (itx_t *itx) |

| static void | zil_itxg_clean (itxs_t *itxs) |

| | Free up the sync and async itxs.

|

| static int | zil_aitx_compare (const void *x1, const void *x2) |

| static void | zil_remove_async (zilog_t *zilog, uint64_t oid) |

| | Remove all async itxs with the given oid.

|

| void | zil_itx_assign (zilog_t *zilog, itx_t *itx, dmu_tx_t *tx) |

| void | zil_clean (zilog_t *zilog, uint64_t synced_txg) |

| | If any in-memory intent log transactions have now been synced, start up a taskq to free them.

|

| static void | zil_get_commit_list (zilog_t *zilog) |

| | Get the list of itxs to commit into zl_itx_commit_list.

|

| static void | zil_commit_writer (zilog_t *zilog) |

| void | zil_commit (zilog_t *zilog, uint64_t foid) |

| | Commit zfs transactions to stable storage.

|

| void | zil_sync (zilog_t *zilog, dmu_tx_t *tx) |

| | Called in syncing context to free committed log blocks and update log header.

|

| void | zil_init (void) |

| void | zil_fini (void) |

| void | zil_set_sync (zilog_t *zilog, uint64_t sync) |

| void | zil_set_logbias (zilog_t *zilog, uint64_t logbias) |

| zilog_t * | zil_alloc (objset_t *os, zil_header_t *zh_phys) |

| void | zil_free (zilog_t *zilog) |

| zilog_t * | zil_open (objset_t *os, zil_get_data_t *get_data) |

| | Open an intent log.

|

| void | zil_close (zilog_t *zilog) |

| | Close an intent log.

|

| int | zil_suspend (zilog_t *zilog) |

| | Suspend an intent log.

|

| void | zil_resume (zilog_t *zilog) |

| static int | zil_replay_error (zilog_t *zilog, lr_t *lr, int error) |

| static int | zil_replay_log_record (zilog_t *zilog, lr_t *lr, void *zra, uint64_t claim_txg) |

| static int | zil_incr_blks (zilog_t *zilog, blkptr_t *bp, void *arg, uint64_t claim_txg) |

| void | zil_replay (objset_t *os, void *arg, zil_replay_func_t *replay_func[TX_MAX_TYPE]) |

| | If this dataset has a non-empty intent log, replay it and destroy it.

|

| boolean_t | zil_replaying (zilog_t *zilog, dmu_tx_t *tx) |

| int | zil_vdev_offline (const char *osname, void *arg) |

Variables |

| int | zil_replay_disable = 0 |

| | Disable intent logging replay.

|

| boolean_t | zfs_nocacheflush = B_FALSE |

| | Tunable parameter for debugging or performance analysis.

|

| boolean_t | zfs_notrim = B_TRUE |

| static kmem_cache_t * | zil_lwb_cache |

| uint64_t | zil_block_buckets [] |

| | Define a limited set of intent log block sizes.

|

| uint64_t | zil_slog_limit = 1024 * 1024 |

| | Use the slog as long as the logbias is 'latency' and the current commit size is less than the limit or the total list size is less than 2X the limit.

|
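The last two variables above describe two small policies: rounding a log block up to one of a few fixed sizes, and deciding when to use the slog. The following is a minimal sketch of both, not the code in zil.c. Every `sketch_` name, the bucket values, the fallback size, and the struct fields are assumptions made for illustration. The slog test assumes that the 'latency' condition applies to both size checks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed bucket sizes for illustration; the last entry is a catch-all. */
static const uint64_t sketch_block_buckets[] = {
    4096, 8192 + 4096, 32 * 1024 + 4096, UINT64_MAX
};

/* Assumed size used when a request falls into the catch-all bucket. */
static const uint64_t sketch_max_block_size = 128 * 1024;

/* Same value as the documented default of zil_slog_limit. */
static const uint64_t sketch_slog_limit = 1024 * 1024;

/* Return the smallest bucket that can hold 'needed' bytes. */
static uint64_t
sketch_pick_block_size(uint64_t needed)
{
    size_t i = 0;

    /* Terminates because the final bucket is UINT64_MAX. */
    while (needed > sketch_block_buckets[i])
        i++;
    if (sketch_block_buckets[i] == UINT64_MAX)
        return (sketch_max_block_size);
    return (sketch_block_buckets[i]);
}

/* Hypothetical stand-ins for the logbias setting and the zilog counters. */
typedef enum {
    SKETCH_LOGBIAS_LATENCY,
    SKETCH_LOGBIAS_THROUGHPUT
} sketch_logbias_t;

typedef struct sketch_zilog {
    sketch_logbias_t logbias;
    uint64_t cur_used;      /* bytes in the current commit */
    uint64_t itx_list_sz;   /* total bytes of queued itxs */
} sketch_zilog_t;

/* Use the slog only for latency-biased logs with a small enough backlog. */
static bool
sketch_use_slog(const sketch_zilog_t *zl)
{
    assert(zl != NULL);
    return (zl->logbias == SKETCH_LOGBIAS_LATENCY &&
        (zl->cur_used < sketch_slog_limit ||
        zl->itx_list_sz < 2 * sketch_slog_limit));
}
```

With these assumed values, a 20000-byte request rounds up to the 36864-byte bucket, and a throughput-biased log never uses the slog regardless of size.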

ZFS Intent Log.

The ZFS intent log (ZIL) saves transaction records of system calls that change the file system in memory with enough information to be able to replay them. These are stored in memory until either the DMU transaction group (txg) commits them to the stable pool and they can be discarded, or they are flushed to the stable log (also in the pool) due to an fsync, O_DSYNC or other synchronous requirement. In the event of a panic or power failure, those log records (transactions) are replayed.
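The first half of that lifecycle, discarding in-memory records once their txg has reached the stable pool, can be sketched as follows. This is a simplified illustration, not the zil.c implementation: `sketch_itx_t` and `sketch_discard_synced` are hypothetical names, and a flat array stands in for the real per-txg lists.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical in-memory record: only the fields this sketch needs. */
typedef struct sketch_itx {
    uint64_t txg;   /* transaction group the change belongs to */
    int live;       /* nonzero while the record is still held in memory */
} sketch_itx_t;

/*
 * Discard every live record whose txg is at or below synced_txg.
 * Returns the number of records discarded by this call.
 */
static size_t
sketch_discard_synced(sketch_itx_t *itxs, size_t n, uint64_t synced_txg)
{
    size_t discarded = 0;

    assert(itxs != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (itxs[i].live && itxs[i].txg <= synced_txg) {
            itxs[i].live = 0;   /* the pool now holds this change */
            discarded++;
        }
    }
    return (discarded);
}
```

Records belonging to a txg that has not yet synced stay live, which is what makes them available for a later synchronous flush to the stable log.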

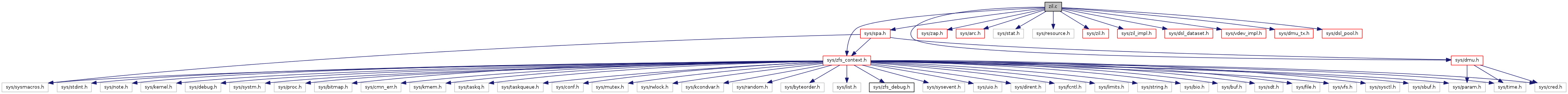

There is one ZIL per file system. Its on-disk (pool) format consists of 3 parts:

- ZIL header

- ZIL blocks

- ZIL records

A log record holds a system call transaction. Log blocks can hold many log records, and the blocks are chained together: each ZIL block contains a block pointer (blkptr_t) to the next ZIL block in the chain, and the ZIL header points to the first block in the chain. Note that there is no fixed place in the pool to hold log blocks; they are dynamically allocated and freed as needed from the blocks available. Figure X shows the ZIL structure:
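The header, block, and record layout described here can be sketched as a linked chain that is walked from the header. This is a minimal sketch under stated assumptions: the `sketch_` types are hypothetical stand-ins, an ordinary C pointer replaces the on-disk blkptr_t, and a fixed-size array replaces the variable-length records of a real log block.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_MAX_RECORDS 4    /* assumed per-block capacity */

/* Hypothetical log record: one system call transaction. */
typedef struct sketch_record {
    uint64_t txtype;    /* kind of operation recorded */
    uint64_t seq;       /* record sequence number */
} sketch_record_t;

/* Hypothetical log block: several records plus a link to the next block. */
typedef struct sketch_block {
    struct sketch_block *next;  /* stands in for the blkptr_t link */
    size_t nrecords;
    sketch_record_t records[SKETCH_MAX_RECORDS];
} sketch_block_t;

/* Hypothetical header: points at the first block in the chain. */
typedef struct sketch_header {
    sketch_block_t *first;
} sketch_header_t;

typedef void sketch_record_func_t(const sketch_record_t *rec, void *arg);

/* Visit every record in chain order; returns the number of records seen. */
static size_t
sketch_walk_chain(const sketch_header_t *hdr, sketch_record_func_t *func,
    void *arg)
{
    size_t visited = 0;

    assert(hdr != NULL);
    for (const sketch_block_t *blk = hdr->first; blk != NULL;
        blk = blk->next) {
        assert(blk->nrecords <= SKETCH_MAX_RECORDS);
        for (size_t i = 0; i < blk->nrecords; i++) {
            if (func != NULL)
                func(&blk->records[i], arg);
            visited++;
        }
    }
    return (visited);
}

/* Example callback: accumulate sequence numbers into a uint64_t. */
static void
sketch_sum_seq(const sketch_record_t *rec, void *arg)
{
    *(uint64_t *)arg += rec->seq;
}
```

Because the blocks are reachable only through the header and the per-block links, nothing in the sketch depends on where a block lives, which mirrors the point that log blocks have no fixed place in the pool.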

Definition in file zil.c.

1.7.3