
FreeBSD ZFS

The Zettabyte File System

|

|

FreeBSD ZFS

The Zettabyte File System

|

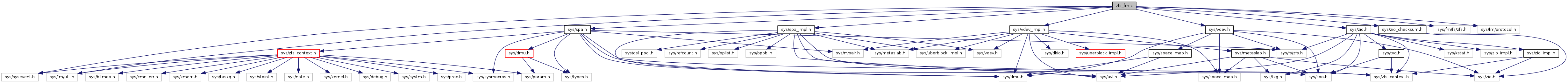

#include <sys/spa.h>
#include <sys/spa_impl.h>
#include <sys/vdev.h>
#include <sys/vdev_impl.h>
#include <sys/zio.h>
#include <sys/zio_checksum.h>
#include <sys/fm/fs/zfs.h>
#include <sys/fm/protocol.h>
#include <sys/fm/util.h>
#include <sys/sysevent.h>


Data Structures

| Kind | Name |
|---|---|
| `struct` | `zfs_ecksum_info` |
| `struct` | `zfs_ecksum_info::zei_ranges` |

Defines

| Macro | Value |
|---|---|
| `ZFM_MAX_INLINE` | `(128 / sizeof (uint64_t))` |
| `MAX_RANGES` | `16` |

Typedefs

| Definition | Name |
|---|---|
| `typedef struct zfs_ecksum_info` | `zfs_ecksum_info_t` |

Functions

| Return type | Function | Description |
|---|---|---|
| `static void` | `zfs_ereport_start(nvlist_t **ereport_out, nvlist_t **detector_out, const char *subclass, spa_t *spa, vdev_t *vd, zio_t *zio, uint64_t stateoroffset, uint64_t size)` | This general routine is responsible for generating all the different ZFS ereports. |
| `static void` | `update_histogram(uint64_t value_arg, uint16_t *hist, uint32_t *count)` | |
| `static void` | `shrink_ranges(zfs_ecksum_info_t *eip)` | We've now filled up the range array, and need to increase "mingap" and shrink the range list accordingly. |
| `static void` | `add_range(zfs_ecksum_info_t *eip, int start, int end)` | |
| `static size_t` | `range_total_size(zfs_ecksum_info_t *eip)` | |
| `static zfs_ecksum_info_t *` | `annotate_ecksum(nvlist_t *ereport, zio_bad_cksum_t *info, const uint8_t *goodbuf, const uint8_t *badbuf, size_t size, boolean_t drop_if_identical)` | |
| `void` | `zfs_ereport_post(const char *subclass, spa_t *spa, vdev_t *vd, zio_t *zio, uint64_t stateoroffset, uint64_t size)` | |
| `void` | `zfs_ereport_start_checksum(spa_t *spa, vdev_t *vd, struct zio *zio, uint64_t offset, uint64_t length, void *arg, zio_bad_cksum_t *info)` | |
| `void` | `zfs_ereport_finish_checksum(zio_cksum_report_t *report, const void *good_data, const void *bad_data, boolean_t drop_if_identical)` | |
| `void` | `zfs_ereport_free_checksum(zio_cksum_report_t *rpt)` | |
| `void` | `zfs_ereport_send_interim_checksum(zio_cksum_report_t *report)` | |
| `void` | `zfs_ereport_post_checksum(spa_t *spa, vdev_t *vd, struct zio *zio, uint64_t offset, uint64_t length, const void *good_data, const void *bad_data, zio_bad_cksum_t *zbc)` | If we have the good data in hand, this function can be used. |
| `static void` | `zfs_post_common(spa_t *spa, vdev_t *vd, const char *name)` | |
| `void` | `zfs_post_remove(spa_t *spa, vdev_t *vd)` | The 'resource.fs.zfs.removed' event is an internal signal that the given vdev has been removed from the system. |
| `void` | `zfs_post_autoreplace(spa_t *spa, vdev_t *vd)` | The 'resource.fs.zfs.autoreplace' event is an internal signal that the pool has the 'autoreplace' property set, and therefore any broken vdevs will be handled by higher level logic, and no vdev fault should be generated. |
| `void` | `zfs_post_state_change(spa_t *spa, vdev_t *vd)` | The 'resource.fs.zfs.statechange' event is an internal signal that the given vdev has transitioned its state to DEGRADED or HEALTHY. |

Typedef Documentation

`typedef struct zfs_ecksum_info zfs_ecksum_info_t`

Function Documentation

`static void add_range(zfs_ecksum_info_t *eip, int start, int end)`

`static zfs_ecksum_info_t *annotate_ecksum(nvlist_t *ereport, zio_bad_cksum_t *info, const uint8_t *goodbuf, const uint8_t *badbuf, size_t size, boolean_t drop_if_identical)`

`static size_t range_total_size(zfs_ecksum_info_t *eip)`

`static void shrink_ranges(zfs_ecksum_info_t *eip)`

We've now filled up the range array, and need to increase "mingap" and shrink the range list accordingly.

zei_mingap is always the smallest distance between array entries, so we set new_allowed_gap to one greater than that. We then go through the list, joining together any ranges that are closer than new_allowed_gap.

By construction, at least one pair of ranges will be joined (the pair separated by the old zei_mingap). We also update zei_mingap to the new smallest gap, to prepare for the next invocation.
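The merge pass described above can be sketched in C. This is a minimal illustration, not the ZFS implementation: `struct range`, `struct range_list`, and `shrink_ranges_sketch()` are hypothetical, simplified stand-ins for `zfs_ecksum_info_t` and its `zei_ranges` array, assuming the ranges are sorted, non-overlapping, half-open `[start, end)` byte offsets and that `mingap` is accurate on entry.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_RANGES 16

/* Hypothetical stand-in for one zei_ranges entry: half-open [start, end). */
struct range {
	uint32_t start;
	uint32_t end;
};

/* Hypothetical stand-in for the range bookkeeping in zfs_ecksum_info_t. */
struct range_list {
	struct range r[MAX_RANGES];
	size_t count;
	uint32_t mingap;	/* smallest gap between adjacent ranges */
};

/*
 * Join every pair of neighbours whose gap is below mingap + 1, compacting
 * the array in place, then record the smallest surviving gap.
 */
static void
shrink_ranges_sketch(struct range_list *rl)
{
	uint32_t new_allowed_gap = rl->mingap + 1;
	uint32_t new_mingap = UINT32_MAX;
	size_t out = 0;

	assert(rl->count > 1);

	for (size_t i = 0; i < rl->count; i++) {
		if (out > 0) {
			uint32_t gap = rl->r[i].start - rl->r[out - 1].end;
			if (gap < new_allowed_gap) {
				/* Too close: extend the previous output range. */
				rl->r[out - 1].end = rl->r[i].end;
				continue;
			}
			if (gap < new_mingap)
				new_mingap = gap;
		}
		rl->r[out++] = rl->r[i];
	}

	/* The pair separated by the old mingap always merges. */
	assert(out < rl->count);
	rl->count = out;
	rl->mingap = new_mingap;
}
```

For example, with ranges [0,4), [6,10), [20,24), [27,30) and mingap 2, the first two ranges merge into [0,10), three ranges remain, and mingap becomes 3.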

`static void update_histogram(uint64_t value_arg, uint16_t *hist, uint32_t *count)`

`void zfs_ereport_finish_checksum(zio_cksum_report_t *report, const void *good_data, const void *bad_data, boolean_t drop_if_identical)`

`void zfs_ereport_free_checksum(zio_cksum_report_t *rpt)`

`void zfs_ereport_post_checksum(spa_t *spa, vdev_t *vd, struct zio *zio, uint64_t offset, uint64_t length, const void *good_data, const void *bad_data, zio_bad_cksum_t *zbc)`

`void zfs_ereport_send_interim_checksum(zio_cksum_report_t *report)`

`static void zfs_ereport_start(nvlist_t **ereport_out, nvlist_t **detector_out, const char *subclass, spa_t *spa, vdev_t *vd, zio_t *zio, uint64_t stateoroffset, uint64_t size)`

This general routine is responsible for generating all the different ZFS ereports.

The payload is dependent on the class, and which arguments are supplied to the function:

| Ereport | Pool | Vdev | I/O |
|---|---|---|---|
| block | X | X | X |
| data | X | | X |
| device | X | X | |
| pool | X | | |

If we are in a loading state, all errors are chained together by the same SPA-wide ENA (Error Numeric Association).

For isolated I/O requests, we get the ENA from the zio_t. The propagation gets very complicated due to RAID-Z, gang blocks, and vdev caching. We want to chain together all ereports associated with a logical piece of data. For read I/Os, there are basically three 'types' of I/O, which form a roughly layered diagram:

1. Aggregate I/O (top layer): no associated logical data or device.
2. Read I/O (middle layer): reads associated with a piece of logical data. This includes reads on behalf of RAID-Z, mirrors, gang blocks, retries, etc.
3. Physical I/O (bottom layer): reads associated with a particular device, but no logical data. Issued as part of vdev caching and I/O aggregation.

Note that 'physical I/O' here does not carry the same meaning as in the rest of ZIO, where it typically just means that no block pointer is attached. I/O with no associated block pointer can still be related to a logical piece of data (e.g. RAID-Z requests).

Purely physical I/Os always have unique ENAs. They are not related to any particular piece of logical data, and therefore cannot be chained together. We still generate an ereport, but the diagnosis engine (DE) doesn't correlate it with any logical piece of data. When such an I/O fails, the delegated I/O requests will issue a retry, which triggers the 'real' ereport with the correct ENA.

We keep track of the ENA for a ZIO chain through the 'io_logical' member. When a new logical I/O is issued, we set this to point to itself. Child I/Os then inherit this pointer, so that once the ENA is first set, subsequent failures in the chain use the same ENA. For vdev cache fill and queue aggregation I/O, this pointer is set to NULL, and no ereport is generated (since such I/O doesn't correspond to any particular device or piece of data, and the caller will always retry without caching or queueing anyway).

For checksum errors, we want to include more information about the actual error that occurred. Accordingly, we build an ereport when the error is noticed, but instead of sending it immediately, we hang it off the io_cksum_report field of the logical I/O. When the logical I/O completes (successfully or not), zfs_ereport_finish_checksum() is called with the good and bad versions of the buffer (if available), and we annotate the ereport with information about the differences.
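The io_logical scheme can be modeled with a small C sketch. Everything here is hypothetical: `struct io`, its `ena` field, the `next_ena` counter, and the helper functions are simplified stand-ins, not the real `zio_t` or FMA ENA generator. The sketch models only the io_logical paragraph, not the SPA-wide ENA used while loading, and it assumes 0 as a sentinel meaning "no ENA yet" or "don't report".

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, pared-down I/O node (not the real zio_t). */
struct io {
	struct io *logical;	/* owning logical I/O, or NULL for cache fill/aggregation */
	uint64_t ena;		/* 0 until the first ereport in the chain */
};

/* Hypothetical stand-in for a real ENA generator. */
static uint64_t next_ena = 1;

/* A newly issued logical I/O points at itself. */
static void
io_init_logical(struct io *io)
{
	io->logical = io;
	io->ena = 0;
}

/* Child I/Os inherit the parent's logical pointer. */
static void
io_init_child(struct io *child, const struct io *parent)
{
	assert(parent != NULL);
	child->logical = parent->logical;
	child->ena = 0;
}

/* ENA to stamp on an ereport for this I/O, or 0 meaning "don't report". */
static uint64_t
io_ereport_ena(struct io *io)
{
	if (io->logical == NULL)
		return 0;	/* no logical data: the caller retries anyway */
	if (io->logical->ena == 0)
		io->logical->ena = next_ena++;	/* first failure fixes the ENA */
	return io->logical->ena;
}
```

Whichever I/O in the chain fails first fixes the ENA on the shared logical I/O, so later ereports from its parent, siblings, or descendants reuse it.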
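As an illustration of the kind of annotation involved, the sketch below compares a good and a bad buffer byte by byte, records the first and last differing offsets, and counts bits that flipped in each direction. The `struct cksum_diff` type and the `diff_buffers()` and `popcount8()` functions are hypothetical; the file's own routines (`add_range()`, `update_histogram()`, `annotate_ecksum()`) use their own data layout, which this sketch does not reproduce. Returning 0 for identical buffers only loosely mirrors the idea behind `drop_if_identical`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical summary of how a bad buffer differs from the good one. */
struct cksum_diff {
	size_t first_diff;	/* offset of the first differing byte */
	size_t last_diff;	/* offset of the last differing byte */
	uint32_t bits_set;	/* bits that are 1 in bad but 0 in good */
	uint32_t bits_cleared;	/* bits that are 0 in bad but 1 in good */
};

/* Count set bits in one byte. */
static uint32_t
popcount8(uint8_t v)
{
	uint32_t n = 0;

	while (v != 0) {
		v &= (uint8_t)(v - 1);	/* clear the lowest set bit */
		n++;
	}
	return n;
}

/* Fill *d and return 1 if the buffers differ; return 0 if identical. */
static int
diff_buffers(const uint8_t *good, const uint8_t *bad, size_t size,
    struct cksum_diff *d)
{
	int found = 0;

	assert(good != NULL && bad != NULL && d != NULL);
	d->first_diff = d->last_diff = 0;
	d->bits_set = d->bits_cleared = 0;

	for (size_t i = 0; i < size; i++) {
		uint8_t x = (uint8_t)(good[i] ^ bad[i]);	/* flipped bits */
		if (x == 0)
			continue;
		if (!found) {
			d->first_diff = i;
			found = 1;
		}
		d->last_diff = i;
		d->bits_set += popcount8((uint8_t)(x & bad[i]));
		d->bits_cleared += popcount8((uint8_t)(x & good[i]));
	}
	return found;
}
```

Comparing a good buffer {0x00, 0xFF, 0x0F, 0x00} against a bad one {0x00, 0xF0, 0x1F, 0x00} reports differences at offsets 1 through 2, with four bits cleared and one bit set.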

`void zfs_ereport_start_checksum(spa_t *spa, vdev_t *vd, struct zio *zio, uint64_t offset, uint64_t length, void *arg, zio_bad_cksum_t *info)`

`void zfs_post_state_change(spa_t *spa, vdev_t *vd)`

The 'resource.fs.zfs.statechange' event is an internal signal that the given vdev has transitioned its state to DEGRADED or HEALTHY.

This will cause the retire agent to repair any outstanding fault management cases open because the device was not found (fault.fs.zfs.device).

1.7.3
