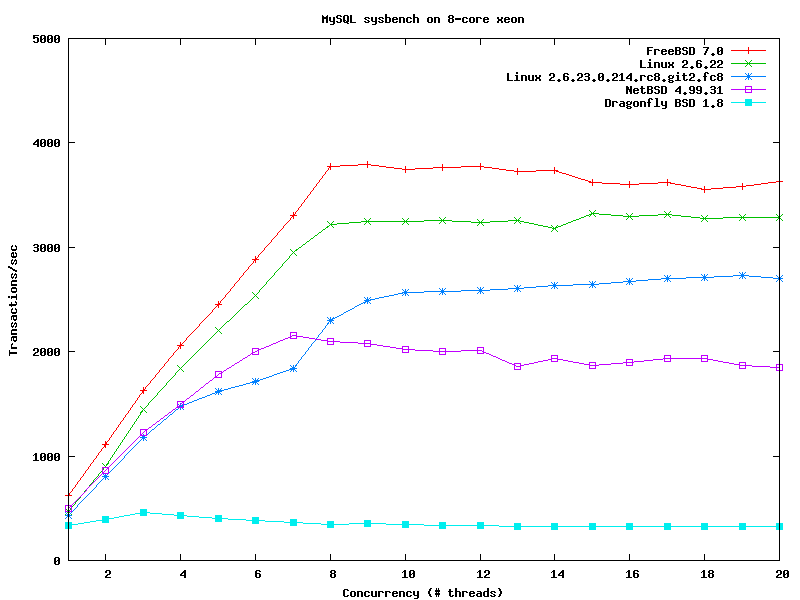

In May 2007 I ran some benchmarks of Dragonfly 1.8 to evaluate the progress of its SMP implementation. SMP was the original focus of the project when it launched in 2003, and it is still widely believed to be an area in which the project has made concrete progress. This was part of a larger cross-OS multiprocessor performance evaluation comparing improvements in FreeBSD with Linux, NetBSD and other operating systems.

The 2007 results showed essentially no performance increase from multiple processors on Dragonfly 1.8, in contrast to FreeBSD 7.0, which scaled to 8 CPUs on the benchmark.

Recently Dragonfly 1.12 was released, and the question was raised on the dragonfly-users mailing list of how well the OS performs after a further year of development. I performed several benchmarks to study this question.

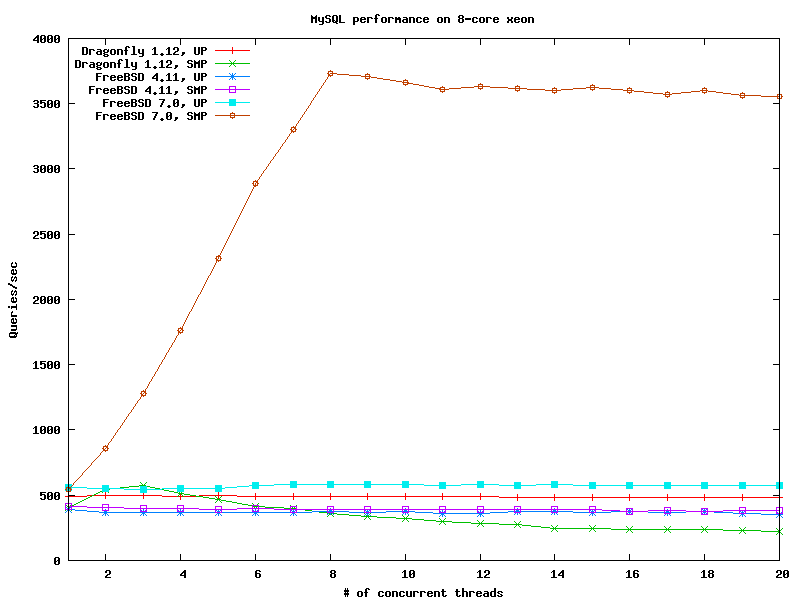

In this round of testing I compared Dragonfly 1.12, FreeBSD 4.11 and FreeBSD 7.0, running on the same 8-core Xeon hardware. On Dragonfly the GENERIC kernel configuration was used except for enabling SMP and APIC_IO (for the SMP tests), and removing I486_CPU. Under FreeBSD the GENERIC kernel was used except for enabling the SCHED_ULE scheduler on 7.0, removing I486_CPU and enabling SMP when appropriate. The test applications were compiled from ports/pkgsrc, with the same versions and configuration options used for each OS.

The MySQL configuration is the same as in my previous test and is also documented here.

Here are the results:

Peak SMP performance on Dragonfly 1.12 is only 15% better than UP performance, and SMP performance drops to about 50% below UP performance at higher loads. Enabling SMP carries a 20% performance overhead on this benchmark.
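The percentages above are simple ratios of throughput figures. As a minimal sketch of that arithmetic, the snippet below uses invented throughput numbers (not measured data) chosen only so that they reproduce the quoted ratios. The function name `percent_change` and the variable names are hypothetical, and the load point at which the SMP overhead is measured is an assumption.

```python
def percent_change(value, baseline):
    """Return how far `value` lies above (+) or below (-) `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (value - baseline) / baseline * 100.0


# Illustrative throughputs in transactions/sec. These are NOT measurements;
# they are invented so that the ratios match the percentages quoted in the text.
up_throughput = 1000.0   # UP kernel
smp_baseline = 800.0     # SMP kernel at the load where overhead is measured (assumed)
smp_peak = 1150.0        # best SMP result at any thread count
smp_high_load = 500.0    # SMP result at the highest thread counts

print(f"SMP overhead vs UP:  {percent_change(smp_baseline, up_throughput):+.0f}%")
print(f"SMP peak vs UP:      {percent_change(smp_peak, up_throughput):+.0f}%")
print(f"SMP high load vs UP: {percent_change(smp_high_load, up_throughput):+.0f}%")
```

Expressing every figure relative to the same UP baseline keeps the "overhead", "peak" and "high load" numbers directly comparable.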

Dragonfly UP mode is faster than FreeBSD 4.11 when using the libthread_xu library. With libc_r (not graphed) performance is identical to 4.11 in both UP and SMP mode, so the UP performance increase is most likely due to the thread library.

Note: I am using MySQL 5.0.51 in the current tests, which has different performance characteristics from the older 5.0.37 tested last year, so the current data cannot be compared directly to the previous Dragonfly 1.8 graphs to evaluate whether a small amount of progress was made since 1.8. However, there does not appear to be any significant performance improvement from Dragonfly 1.8 to 1.12.

FreeBSD 7.0 scales to 8 CPUs on this benchmark. Peak performance is 6.5 times higher than peak Dragonfly performance, and 9.0 times higher than FreeBSD 4.11 performance. UP performance is consistent with SMP performance with a single thread. FreeBSD 7.0 UP is 45% faster than 4.11 UP and 10% faster than Dragonfly UP.

Note that while these benchmarks were run on a test system with 8 CPU cores, the results also provide information about performance on systems with fewer than 8 cores, such as dual-core systems. If the system does not show an appreciable performance gain when 2 threads are active and most CPUs are idle, it is unlikely to perform much better when the system only has 2 CPUs. I could not test this directly because I don't know how to disable CPUs at boot time or run time on Dragonfly.

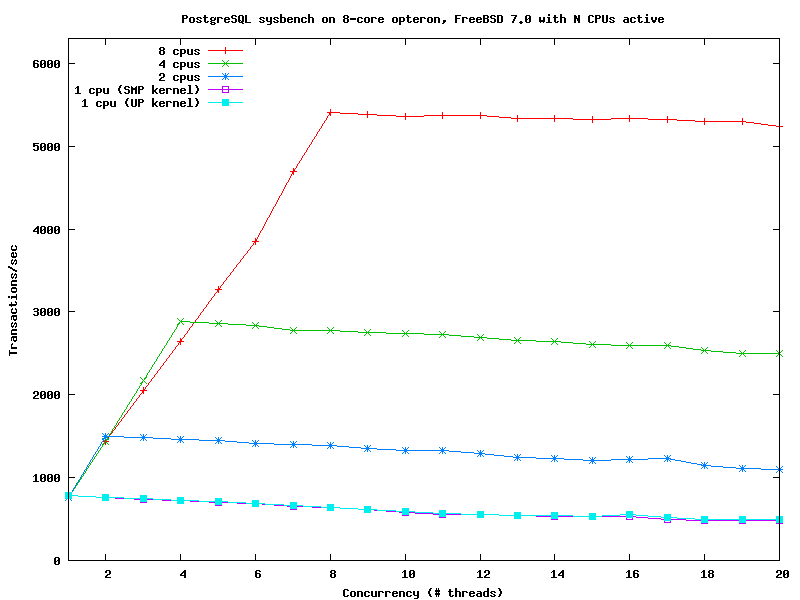

For example, this graph shows FreeBSD 7.0 running PostgreSQL on the same system with 1, 2, 4 or 8 CPU cores active, and also compares the UP and SMP kernels running with 1 CPU active.

The performance seen with 8 CPUs also scales down to 1, 2 and 4 CPUs. This also shows that there is negligible overhead from running the FreeBSD 7.0 SMP kernel on a UP system on this workload.
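One way to make "scales down" concrete is to compute speedup and per-CPU efficiency relative to the single-CPU result. The following is a rough sketch under stated assumptions: the throughput values are invented for illustration (they are not read off the graph), and `scaling_table` is a hypothetical helper name.

```python
def scaling_table(throughput_by_cpus):
    """Return (cpus, speedup, efficiency) rows relative to the 1-CPU result."""
    base = throughput_by_cpus[1]
    rows = []
    for cpus in sorted(throughput_by_cpus):
        speedup = throughput_by_cpus[cpus] / base
        # Efficiency of 1.0 would mean perfectly linear scaling.
        rows.append((cpus, speedup, speedup / cpus))
    return rows


# Hypothetical peak throughputs (transactions/sec) per active CPU count.
# These numbers are made up and do NOT come from the benchmark.
example = {1: 100.0, 2: 190.0, 4: 360.0, 8: 640.0}

for cpus, speedup, efficiency in scaling_table(example):
    print(f"{cpus} CPU(s): speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```

An OS that does not scale to a second CPU would show a speedup close to 1.0 at every CPU count, which is why results from an 8-core machine are still informative about dual-core systems.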

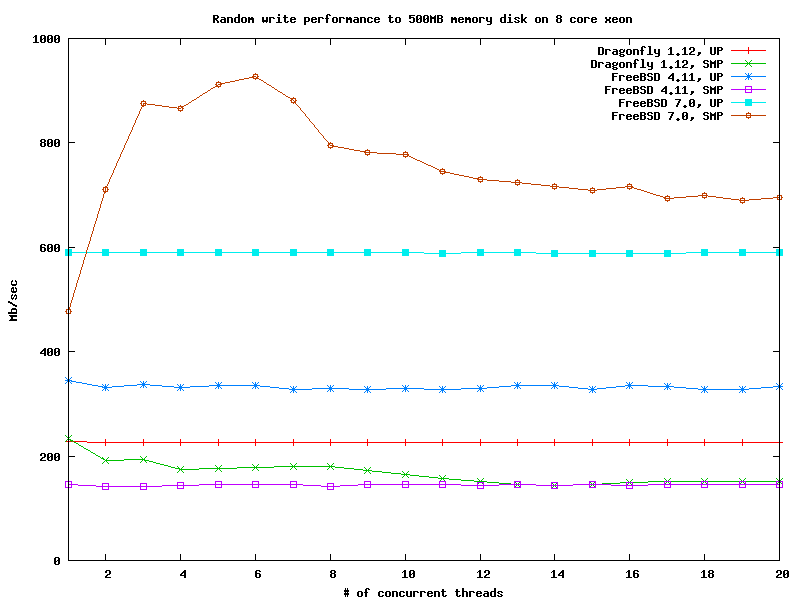

Currently the major focus of the Dragonfly project is the development of a new filesystem, so it's interesting to see how well the Dragonfly filesystem layer performs. I created a 500MB memory filesystem (MFS) and used sysbench to perform multi-threaded random write I/O. It seems that MFS cannot create file systems larger than 500MB, which was a limiting factor on Dragonfly and FreeBSD 4.11.

Dragonfly 1.12 UP performance is about 30% lower than FreeBSD 4.11 UP performance. Enabling SMP does not impose an overhead on this test, but there is no performance benefit from multiple threads; instead, performance drops as low as FreeBSD 4.11 SMP performance at high loads.

FreeBSD 7.0 does not support the MFS file system; the nearest alternative is the tmpfs filesystem which was used for this test.

The sysbench file I/O benchmark apparently has a race condition that causes it to abort under high I/O load. Enabling debugging shows that sysbench sometimes generates I/O requests that are out of range of the specified test parameters, such as file size and number of files, so this appears to be a sysbench bug. This was only a factor on FreeBSD 7.0, but the benchmarks were averaged over 5 trials to reduce error.
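As a rough illustration of averaging over repeated trials when some runs abort, the sketch below summarizes only the completed runs. Discarding aborted runs is an assumption on my part about how such data might be handled, the throughput values are invented, and `summarize_trials` is a hypothetical helper name.

```python
from statistics import mean, stdev


def summarize_trials(results):
    """Average the completed trials; `None` marks a run that aborted."""
    completed = [r for r in results if r is not None]
    if not completed:
        raise ValueError("no trial completed")
    # A standard deviation needs at least two data points.
    spread = stdev(completed) if len(completed) > 1 else 0.0
    return {"runs": len(completed), "mean": mean(completed), "stdev": spread}


# Hypothetical throughputs (MB/sec) for 5 trials at one thread count.
# These are NOT measured values; the third run is treated as aborted.
trials = [412.0, 405.0, None, 420.0, 409.0]

summary = summarize_trials(trials)
print(f"{summary['runs']} completed runs, "
      f"mean {summary['mean']:.1f} MB/sec, stdev {summary['stdev']:.1f}")
```

Reporting the number of completed runs alongside the mean makes it visible when a data point rests on fewer trials than the others.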

FreeBSD 7 scales to 3 simultaneous writers, and peak SMP performance is 4 times higher than Dragonfly peak performance and 2.6 times higher than FreeBSD 4 performance. FreeBSD 7 peak performance may be limited by memory bandwidth rather than kernel scaling limitations, although those come into play at higher loads.

FreeBSD 7.0 has a 20% overhead from SMP on this test compared to UP, which may be because the lockmgr primitive has not yet been optimized for SMP mode (this work is in progress). However, SMP performance exceeds UP with a second writer, and SMP remains 17% faster than UP at high loads.

UP performance on FreeBSD 7 is 2.6 times higher than Dragonfly UP performance and 1.8 times higher than FreeBSD 4 UP performance. It is unknown how much of this difference is due to the different design of the tmpfs filesystem.

Networking is the major subsystem that has received SMP development work in Dragonfly, although that work was never completed and the stack still does not allow concurrency in network processing (FreeBSD has a parallelized network stack, and further performance work is ongoing in FreeBSD 8.0).

I did not directly test network performance, e.g. as a DNS or web server. I can do this if someone is interested.

NFS performance on this hardware was anomalously low on both FreeBSD 4.11 and Dragonfly, averaging only about 300KB/sec on an Intel gigabit ethernet NIC. This did not affect the other benchmarks because they were not performing NFS I/O.

Dragonfly project supporters often assert that Dragonfly has "much better" stability than FreeBSD. It is not clear by what metric this is being objectively evaluated (if at all). A direct measurement of stability is desirable.

One measure of system stability is the ability to function correctly under extreme overload conditions. This tends to provoke race conditions and other exceptions at a higher frequency than at the light loads encountered on desktop systems.

To simulate system overload I ran the stress2 benchmark suite on the 8-core Xeon. This is a suite of test applications that impose massive overload on the system under various concurrent workloads. FreeBSD is able to run this test suite indefinitely without errors.

The first problem was encountered while trying to unpack the stress2 archive to NFS:

    # ls
    stress2.tgz
    # tar xvf stress2.tgz
    tar: Error opening archive: Failed to open 'stress2.tgz': No such file or directory
    # ls -l
    ls: stress2.tgz: No such file or directory
    total 0

This looks like it might be a bug in the Dragonfly name cache.

After starting the stress suite, the system panicked in under 4 minutes. Unfortunately I was unable to obtain details of the panic because the serial console was not working.

Obviously one panic does not demonstrate wide-ranging system instability, but it does point to a possible selection bias amongst the project supporters, who may not be looking hard enough for the stability problems that exist.

As with the Dragonfly 1.8 kernel, the Dragonfly 1.12 kernel does not scale to a second CPU on the benchmarks performed, and the limited SMP implementation can cause a large performance loss at higher loads. There is sometimes a large performance overhead from enabling SMP compared to UP, and performance was sometimes worse than that of FreeBSD 4.11. In all cases measured, FreeBSD 7.0 performs significantly better than both FreeBSD 4.11 and Dragonfly 1.12 in both SMP and UP configurations.